Critical Chain / Agile hybrid

Not to be confused with the critical path method, although the two are closely related. We combined the critical chain methodology with the agile methodology.

What is Agile?

Agile management, or agile process management, or simply agile, refers to an iterative, incremental method of managing the design and build activities of engineering, information technology and other business areas that aims to provide new product or service development in a highly flexible and interactive manner; an example is its application in Scrum, an original form of agile software development.[1] It requires capable individuals from the relevant business, openness to consistent customer input, and management openness to non-hierarchical forms of leadership.[1] The Agile Manifesto is centered on four values:

- Communication between the people involved is more important than standard processes and tools.

- Focus on delivering a working application rather than on providing thorough documentation.

- Collaborate closely with clients rather than relying on contract negotiation.

- Be open to change instead of freezing the scope of the work.[2]


What is Critical Chain?

Unlike waterfall and agile project management, which focus more on schedules and tasks, the critical chain project management methodology is geared towards solving resource problems. Each project has a core set of dependent tasks, called the critical chain (sometimes loosely referred to as the critical path), that determines the project’s minimum timeline. The methodology commits adequate resources to the critical chain while giving other tasks enough resources to run concurrently, and it keeps a buffer so that resources can be reassigned when needed. This setup suits resource-heavy teams, or teams whose members have flexible, overlapping skill sets.
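As a rough illustration of how a critical chain sets the minimum timeline, here is a minimal Python sketch. The task names, durations and dependencies are hypothetical, not taken from our project, and it assumes resource contention has already been written in as extra dependency edges (one task waiting on another because the same person does both). It only finds the longest chain of dependent tasks; full critical chain scheduling also sizes buffers, which this sketch leaves out.

```python
from functools import lru_cache

# Hypothetical tasks for a small serious-game project: name -> duration in days.
durations = {
    "design_doc": 5,
    "art_assets": 8,
    "core_gameplay": 10,
    "ui": 4,
    "playtest": 3,
}

# Hypothetical dependencies: task -> tasks that must finish first.
# The ui -> art_assets edge is a *resource* dependency (assumed: one artist
# does both jobs), which is what makes this a critical chain rather than a
# plain critical path.
depends_on = {
    "design_doc": [],
    "art_assets": ["design_doc"],
    "core_gameplay": ["design_doc"],
    "ui": ["design_doc", "art_assets"],
    "playtest": ["core_gameplay", "ui"],
}


def critical_chain(durations, depends_on):
    """Return (length, chain) for the longest dependency chain (assumes no cycles)."""

    @lru_cache(maxsize=None)
    def finish(task):
        # Earliest finish = own duration + the latest-finishing predecessor chain.
        best_len, best_chain = 0, ()
        for pred in depends_on[task]:
            length, chain = finish(pred)
            if length > best_len:
                best_len, best_chain = length, chain
        return best_len + durations[task], best_chain + (task,)

    # The project's minimum timeline is the longest of all finishing chains.
    return max(finish(task) for task in durations)


if __name__ == "__main__":
    length, chain = critical_chain(durations, depends_on)
    print(length, " -> ".join(chain))  # 20 design_doc -> art_assets -> ui -> playtest
```

With the shared-artist edge, the chain runs through the art and UI work for 20 days. Drop that resource edge (so `ui` depends only on `design_doc`) and the longest chain becomes design_doc -> core_gameplay -> playtest at 18 days, which is the difference between a resource-aware critical chain and a plain critical path.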

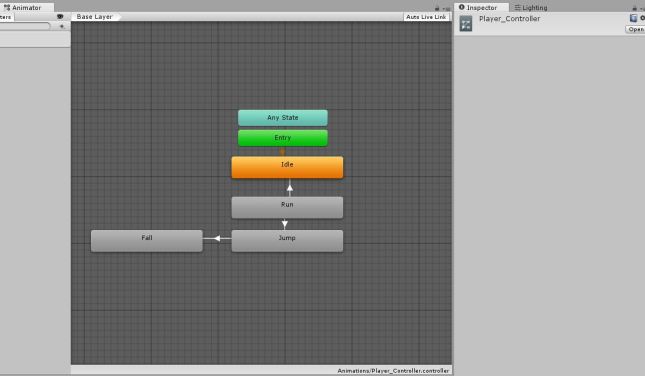

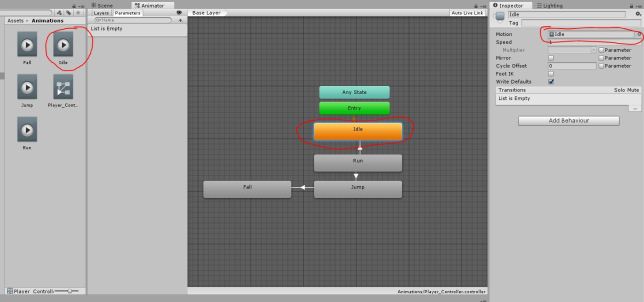

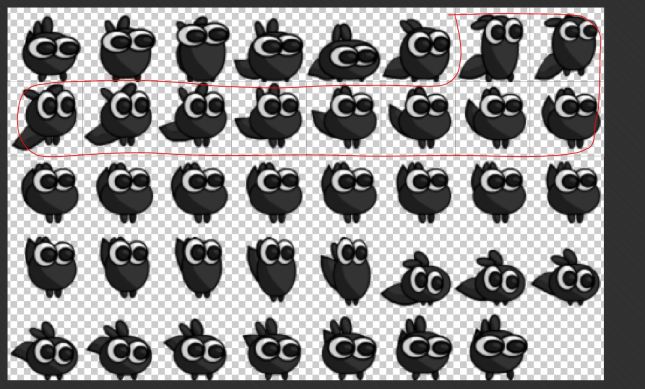

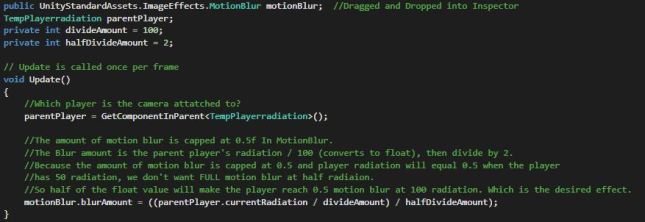

When we set out to create a serious game for New Intelligence (NI), we planned to use an agile project methodology, purely because this was the first time the team and I had “worked” for someone else. Although NI were our ‘client’ and were seeking our expertise, they are the ones who know the content inside and out and what the app is intended to do. Ultimately, we followed their lead and they followed ours, each leaning on the other’s expertise. It was never going to be a straightforward project, for many reasons that I’ll discuss in the post-mortem. We knew there was a start and end date, but everything in between was bound to change. We could never know the exact timeline, so it needed to be flexible. We also had to allow for changes arising from collaboration and meetings between New Intelligence and the team.

We ended up adapting an agile methodology and merging it with the critical chain methodology. We needed critical chain because we had core elements that needed attention, but their timing kept changing. We also needed it because agile puts more focus on delivering a working application than on documentation, yet this project still relied VERY heavily on documentation:

- A – So we knew what we were doing.

- B – So we didn’t forget what we were doing.

- C – We needed to figure out the systems and exactly what was going into this app.

- D – We needed others to understand what we were doing.

- E – What if we continued working on this after delivery? What if someone else did?

The critical chain methodology helps us identify the most urgent task and work towards it. It also helps us identify the deadlines we need to meet and keep our focus on them. We know that there are milestones and that those milestones might change: a milestone’s content might change, or the date for achieving it might be pushed forward or back. Critical chain also helps us assign our valuable resources to specific outcomes while still assigning resources to other tasks that can progress side by side without depending on each other.

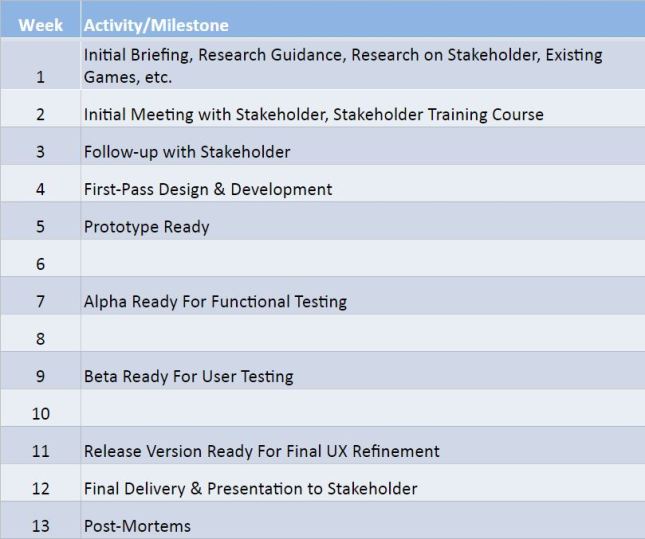

The projected timeline

To wrap up my points above, this has been the first project where I’ve actively stepped down from the project management role. However, I’ve happily shared the tips and tricks I’ve learnt along the way to help enrich our project manager’s knowledge. Ultimately, it’s his call how the project is run and what our approach to tasks and deadlines will be. My personal mentality is simple: if he says we’re doing it this way, I say “okay”.

Until next time –

Bibliography

Text

5 Effective Project Management Methodologies and When to Use Them. (2017). Explore.easyprojects.net. Retrieved from https://explore.easyprojects.net/blog/project-management-methodologies

Agile management. (2017). En.wikipedia.org. Retrieved from https://en.wikipedia.org/wiki/Agile_management